Introduction

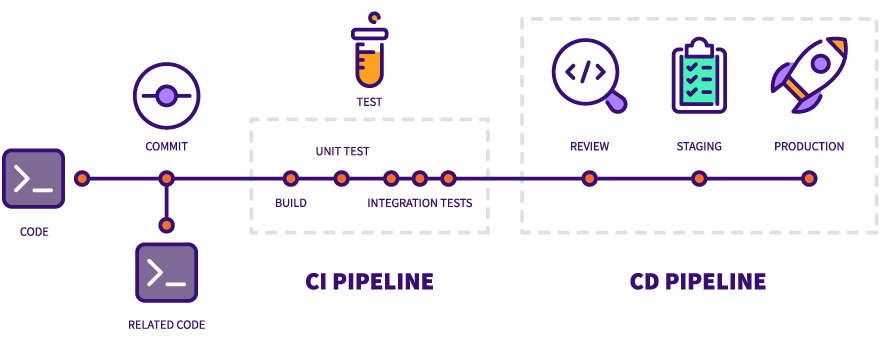

GitLab CI/CD has a powerful but somewhat underdocumented pre-build script feature that allows us to execute custom logic before each job is run on a GitLab runner. In this post, we’ll look at how to use the pre-build script to automate Docker system clean-up on GitLab runners.

GitLab runners and the problem of automated clean-up

Imagine we work on a project using GitLab CI/CD with a set of demanding criteria such as

- high degree of automation,

- need for high runner uptime,

- fast build execution, and

- frequent use of Docker.

Particularly due to the lack of downtime for clean-up and maintenance, we may eventually run into the well-known problem of GitLab runners running out of disk space.

Unfortunately, finding a general solution for GitLab runner clean-up is not particularly easy, as indicated by this still unresolved issue on the GitLab runner issue tracker. For instance, if we simply clean up all Docker resources after each build, we likely won’t run out of disk space. However, our build times would be much higher because Docker could no longer leverage its build cache.

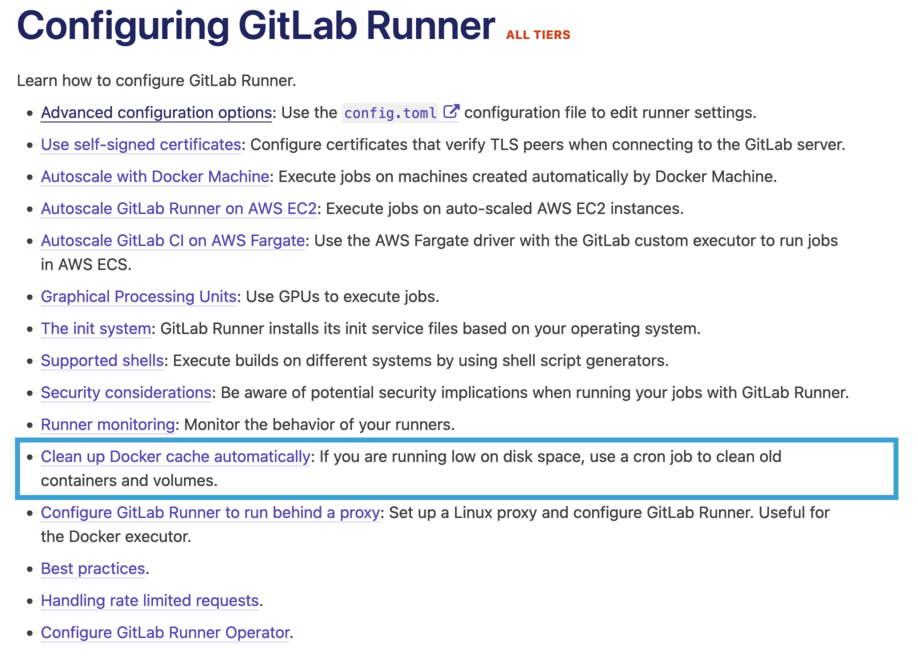

Meanwhile, GitLab’s documentation recommends running the clear-docker-cache script once a week via cron as a workaround. The cron approach is fairly simple and slows down our builds less often. On the flip side, however, we now have to provide our runners with enough disk space for a full week (or whatever interval the cron job is set to run at), which might be excessive and is hard to estimate correctly.

As noted in the unresolved issue mentioned earlier, the approach suggested by GitLab also has at least two more problems:

- it only addresses images, and

- it is indiscriminate, meaning that it may end up cleaning up frequently used images as well.

This is problematic for a couple of reasons. First of all, other cached Docker resources, like volumes and containers, are not targeted by the clean-up script. Moreover, builds may slow down because frequently used images are cleaned up and have to be pulled or rebuilt again. Finally, since the script is run by cron, there is the intricate problem of race conditions between the clean-up script and build jobs: because cron runs asynchronously to job execution, the clean-up job may inadvertently break pipelines by removing images that some jobs depend upon.[1] Running the clean-up script only once a week mitigates this problem, but developers still have to keep in mind that pipelines may occasionally fail due to missing images.

Determining Docker disk usage and cleaning up Docker cache

So, what to do? First of all, let’s look at what tools are available to determine Docker disk usage as well as trigger clean-up of resources that occupy disk space.

To get an indication of the current disk usage of Docker, we can run docker system df. Here’s an example output from my local machine:

```
$ docker system df
TYPE            TOTAL     ACTIVE    SIZE      RECLAIMABLE
Images          2         2         216.6MB   0B (0%)
Containers      2         2         84.83kB   0B (0%)
Local Volumes   5         5         506.7MB   0B (0%)
Build Cache     0         0         0B        0B
```

As we can see, Docker helpfully lists the disk space taken up by each of its resource classes.

With some light BASH acrobatics, we can work this into a test that tells us whether Docker’s disk space usage is above a given limit.
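The clean-up script later in this post downloads a ready-made implementation of such a test from a GitHub gist. The following is only a minimal, hypothetical sketch of the same idea, not the gist’s code: it reads the Size column via docker system df --format '{{.Size}}' (a custom format prints no header), converts each value to bytes, sums them up, and compares the total with a limit. The helper names docker_size_to_bytes and sum_docker_sizes are made up for this example, and the conversion assumes Docker prints sizes with decimal (1000-based) units, as in the output above.

```shell
# Hypothetical sketch, NOT the gist used later in this post.
# Meant to be sourced into the clean-up script.

# Convert a Docker size string such as "216.6MB", "84.83kB" or "0B" to bytes.
# Assumes decimal (1000-based) units, as printed by `docker system df`.
docker_size_to_bytes() {
  awk -v s="$1" 'BEGIN {
    match(s, /^[0-9.]+/)            # numeric prefix, e.g. "216.6"
    num = substr(s, 1, RLENGTH)
    unit = substr(s, RLENGTH + 1)   # unit suffix, e.g. "MB"
    factor = 1
    if (unit == "kB") factor = 1e3
    else if (unit == "MB") factor = 1e6
    else if (unit == "GB") factor = 1e9
    else if (unit == "TB") factor = 1e12
    printf "%.0f\n", num * factor
  }'
}

# Read one Docker size per line from stdin and print their sum in bytes.
sum_docker_sizes() {
  local total=0 size
  while read -r size; do
    [ -n "$size" ] || continue
    total=$((total + $(docker_size_to_bytes "$size")))
  done
  echo "$total"
}

# Succeed (exit status 0) if the Size column of `docker system df`
# adds up to more than the given limit in bytes.
is_docker_disk_space_usage_above_limit() {
  local limit="$1" usage
  usage=$(docker system df --format '{{.Size}}' | sum_docker_sizes)
  if [ "$usage" -gt "$limit" ]; then
    echo "Docker disk space usage ${usage}B is above limit ${limit}B"
    return 0
  fi
  echo "Docker disk space usage ${usage}B is within limit ${limit}B"
  return 1
}
```

For the example output above, the sizes add up to 723384830 bytes (the Reclaimable column is ignored).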

Schematically, we can then use such a test in BASH as follows to trigger our clean-up logic:

```shell
# Limit in bytes, e.g. roughly 2 GB
docker_disk_space_usage_limit=2000000000

# Check if Docker disk space usage is above the limit and run clean-up logic if it is
if is_docker_disk_space_usage_above_limit "${docker_disk_space_usage_limit}"; then
  : # run clean-up logic here
fi
```

If Docker uses too much disk space, we can then remove unused resources via the Docker CLI. The simplest way to do this is to run docker system prune -af --volumes. With these parameters, Docker removes all stopped containers, all networks not used by any container, all images not used by any container (not just dangling ones), the build cache, and unused volumes.

If we need more elaborate clean-up logic, the Docker CLI also provides individual prune commands for images, containers, networks, and volumes, which support filters. For example, docker image prune can be used to remove only images that were created more than 24 hours ago:

```shell
docker image prune -a --force --filter "until=24h"
```

Runner hooks to the rescue

Now that we know how to find out whether Docker is running out of disk space and how to trigger clean-up, we can think about when to run our logic. Given the requirements of our GitLab CI/CD set-up stated earlier, we want to run

- custom clean-up logic,

- pre-emptively (to avoid runner failures due to lack of disk space), and

- in sync with pipeline execution (to prevent randomly failing pipelines).

Luckily, GitLab runners provide a couple of script hooks that let us execute code at various stages of job execution, specifically pre-clone, post-clone, pre-build, and post-build (see the [[runners]] section in Advanced Configuration).

Both the pre-build and the post-build hooks make sense in our scenario, since both

- run synchronously with pipeline jobs (either before or after a job),

- allow us to clean up resources pre-emptively (either before the current job or before the next one), and

- provide a mechanism to define custom clean-up logic.

We choose the pre-build hook here.

To register our pre-build script, we have to configure our GitLab runners using their configuration file like so:

```toml
# /etc/gitlab-runner/config.toml
# ...
[[runners]]
  # ...
  pre_build_script = '''
    # execute clean-up script
  '''
```

Having added the pre_build_script property, our GitLab runners will now execute our clean-up script before each job.

Unfortunately, this is not a perfect, general solution either, as we will discuss later in Prerequisites and limitations of the pre-build script approach. But let’s first look at how to implement the pre-build clean-up technique.

A quick test drive

Installing Docker and gitlab-runner

To test our setup, we will install and configure GitLab runner on an AWS EC2 instance. (Obviously, any other similar infrastructure-as-a-service offering would work too.) GitLab runner binaries are available for multiple platforms; we pick an Ubuntu 20.04 machine here and use the Linux binaries.

After logging into our EC2 instance (with the placeholder variables set to our key pair file, user name, and instance address)

```shell
ssh -i "${KEY_PAIR_PEM_FILE}" "${EC2_USER}@${EC2_INSTANCE_ADDRESS}"
```

we first want to install Docker, e.g. using the official convenience install script:

```shell
curl -fsSL https://get.docker.com -o /home/ubuntu/get-docker.sh
sudo sh /home/ubuntu/get-docker.sh
```

Note that the convenience script is not recommended for production environments. Neither is it generally a good idea to execute a downloaded script with sudo. But since we trust the source here and only run a test, it’s not a big deal.

We can verify that Docker was installed successfully by running e.g. docker --version:

```
$ docker --version
Docker version 20.10.16, build aa7e414
```

Now let’s execute the script shown below to install gitlab-runner.[2]

```shell
sh <<EOF
# Download the binary for your system
sudo curl -L --output /usr/local/bin/gitlab-runner https://gitlab-runner-downloads.s3.amazonaws.com/latest/binaries/gitlab-runner-linux-amd64
# Give it permission to execute
sudo chmod +x /usr/local/bin/gitlab-runner
# Create a GitLab Runner user
sudo useradd --comment 'GitLab Runner' --create-home gitlab-runner --shell /bin/bash
# Install and run as a service
sudo gitlab-runner install --user=gitlab-runner --working-directory=/home/gitlab-runner
sudo gitlab-runner start
EOF
```

And then let’s register our runner with GitLab by running

```shell
sudo gitlab-runner register
```

The gitlab-runner tool will guide us through the process and ask for some configuration details. We have to enter the URL of our GitLab instance (e.g. https://gitlab.com for the public GitLab) and a registration token (which can be copied from Settings > CI/CD > Runners > Specific runners). We also pick the Docker executor (docker), since it is, at least in my experience, the most common one, and the docker:20.10.16 image so that we can run Docker builds within our pipeline jobs.[3] Finally, when prompted for tags, we enter gl-cl so that we can run our pipeline jobs on exactly this machine.

At the end of the registration process, we should see the following confirmation that our runner has been registered.

Runner registered successfully. Feel free to start it, but if it’s running already the config should be automatically reloaded!
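If we’d rather script the registration than answer the prompts, gitlab-runner register can also run non-interactively. The following is a sketch, assuming the runner is registered with gitlab.com and the registration token is stored in a hypothetical REGISTRATION_TOKEN environment variable; the function name register_clean_up_runner and the runner description are made up. The repeated --docker-volumes flags should end up as the volumes of the [runners.docker] section, but the pre-build script still has to be added to the configuration file by hand. Newer GitLab versions are replacing registration tokens with runner authentication tokens, so the exact flags may differ depending on the version in use.

```shell
# Hypothetical helper: non-interactive equivalent of the answers given above.
# Assumes REGISTRATION_TOKEN holds the token from Settings > CI/CD > Runners.
register_clean_up_runner() {
  sudo gitlab-runner register \
    --non-interactive \
    --url "https://gitlab.com" \
    --registration-token "${REGISTRATION_TOKEN:?REGISTRATION_TOKEN is not set}" \
    --description "docker-clean-up-test-runner" \
    --executor "docker" \
    --docker-image "docker:20.10.16" \
    --tag-list "gl-cl" \
    --docker-volumes "/var/run/docker.sock:/var/run/docker.sock" \
    --docker-volumes "/cache"
}
```

Calling register_clean_up_runner after exporting the token then registers the runner in one step.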

As described in Advanced configuration, the configuration file is stored in /etc/gitlab-runner/config.toml on Unix systems. We will add the runners.pre_build_script and runners.docker.volumes properties shown below.

```toml
# /etc/gitlab-runner/config.toml
# ...
[[runners]]
  # ...
  pre_build_script = '''
    sh $CLEAN_UP_SCRIPT
  '''
  # ...
  [runners.docker]
    # ...
    volumes = ["/var/run/docker.sock:/var/run/docker.sock", "/cache"]
```

The pre_build_script property uses a little trick: it simply executes the script file whose path is stored in CLEAN_UP_SCRIPT, which we will later add as a GitLab CI/CD variable of type File. This way, we can change and test our clean-up script without having to connect to our runner. The volumes property mounts the host machine’s Docker socket into the job container, so that we clean up resources on the host machine rather than only inside the container (Docker in Docker via Docker socket binding).
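One caveat of this trick: the runner executes the pre-build script for every job it picks up, including jobs of projects that don’t define CLEAN_UP_SCRIPT. In that case, the unquoted $CLEAN_UP_SCRIPT expands to nothing, and sh is started without a script argument and tries to read commands from standard input, which is unlikely to be what we want. A small guard like the following only runs the script if the variable points to an existing file; run_clean_up_script is a hypothetical name, and its body could just as well be inlined into pre_build_script.

```shell
# Hypothetical guard for the pre-build step: run the clean-up script only
# if the CLEAN_UP_SCRIPT file variable is set and points to an existing file.
run_clean_up_script() {
  if [ -n "${CLEAN_UP_SCRIPT:-}" ] && [ -f "$CLEAN_UP_SCRIPT" ]; then
    sh "$CLEAN_UP_SCRIPT"
  else
    echo "CLEAN_UP_SCRIPT is not set or not a file; skipping clean-up."
  fi
}
```

The function returns the clean-up script’s exit status, so a failing clean-up still fails the job just like the original one-liner.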

Configuring our GitLab CI/CD pipeline

For this to work, the file variable CLEAN_UP_SCRIPT has to be defined in the Settings > CI/CD > Variables section of our project. Let’s add the following clean-up script for now.

```shell
set -eo pipefail

apk update
apk upgrade
apk add bash curl

curl https://gist.githubusercontent.com/fkurz/d84e5117d31c2b37a69a2951561b846e/raw/a39d6adb1aaede5df2fc54c1882618bcea9f01e0/is_docker_disk_space_usage_above_limit.sh > /tmp/is_docker_disk_space_above_limit.sh

bash <<'EOF' || printf "\nClean-up failed.\n"
source /tmp/is_docker_disk_space_above_limit.sh
if is_docker_disk_space_usage_above_limit 2000000000; then
  printf "\nRunning clean up...\n"
  docker system prune -af --volumes
else
  printf "\nSkipping clean up...\n"
fi
EOF
```

Make sure to select File as Type when defining the variable.

Note that we install bash and curl in the pre-build script for simplicity’s sake. This implies that both tools are installed before every job that is processed on this runner. In a real scenario, we’d naturally want to provide a custom image that has all the required tools installed to speed up the pre-build script’s execution.
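Until such a custom image exists, the script could at least skip the installation when the tools are already present. A minimal sketch, assuming an Alpine-based job image with apk (as is the case for the docker:20.10.16 image used here) and package names that match the command names (true for bash and curl); missing_tools and install_missing_tools are hypothetical helper names:

```shell
# Hypothetical helper: print the names of the given commands that are not on PATH.
missing_tools() {
  local tool
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

# Install only the missing tools via Alpine's apk (assumes package name == command name).
install_missing_tools() {
  local missing
  missing=$(missing_tools "$@")
  if [ -n "$missing" ]; then
    # Word splitting on $missing is intended: one package per missing tool.
    apk add --no-cache $missing
  fi
}
```

In the clean-up script, install_missing_tools bash curl would then replace the three apk lines.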

Now let’s add a sample .gitlab-ci.yml to our project, which builds a large (roughly 1 GB) image on every run and will therefore eventually trigger clean-up. (The code is available on GitLab.)

```yaml
stages:
  - build

build-job:
  stage: build
  tags:
    - gl-cl
  script:
    - echo "Generating random nonsense..."
    - ./scripts/generate-random-nonsense.sh
    - echo "Building random nonsense image..."
    - ./scripts/build-random-nonsense-image.sh
```

To limit our pipelines to our new runner, we use tag selectors and pick our previously registered runner via the gl-cl tag. Now we can finally run our pipeline a couple of times to see the effect of our pre-build script. Since every run adds about 1 GB of image data, we should see a couple of jobs without clean-up, eventually followed by a run whose log looks similar to this one:

```
Docker disk space usage is above limit (actual: 2361821460B, limit: 2000000000B)
Running clean up...
Deleted Containers:
9a126aead174a15a4f76f2cb5744e36aff30741cc6ab0ac5044837aaee946496
2fa51dc3e7f5b1e3bec63a635c266552f9b02eb74015a9683f7cbf13418a12eb

Deleted Images:
untagged: registry.gitlab.com/gitlab-org/gitlab-runner/gitlab-runner-helper:x86_64-febb2a09
untagged: registry.gitlab.com/gitlab-org/gitlab-runner/gitlab-runner-helper@sha256:edc1bf6ab9e1c7048d054b270f79919eabcbb9cf052b3e5d6f29c886c842bfed
deleted: sha256:c20c992e5d83348903a6f8d18b4005ed1db893c4f97a61e1cd7a8a06c2989c40
deleted: sha256:873201b44549097dfa61fa4ee55e5efe6e8a41bbc3db9c6c6a9bfad4cb18b4ea
untagged: random-nonsense-image-1653227274:latest
deleted: sha256:67fde47d8b24ee105be2ea3d5f04d6cd0982d9db2f1c934b3f5b3675eb7a626f
deleted: sha256:1a310f85590c46c1e885278d1cab269f07033fefdab8f581f06046787cd6156e
untagged: alpine:latest
untagged: alpine@sha256:4edbd2beb5f78b1014028f4fbb99f3237d9561100b6881aabbf5acce2c4f9454
untagged: random-nonsense-image-1653226909:latest
deleted: sha256:b5923f3fb6dd2446d18d75d5fbdb4d35e5fca888bd88aef8174821c0edfcb87f
deleted: sha256:59150b0202d2d5f75ec54634b4d8b208572cbeec9c5519a9566d2e2e6f2c13f3
deleted: sha256:0ac33e5f5afa79e084075e8698a22d574816eea8d7b7d480586835657c3e1c8b

Total reclaimed space: 2.059GB
```

This output indicates that our pre-build script was executed once Docker’s disk space usage exceeded the 2 GB limit (about 2.36 GB in this run) and that clean-up was triggered successfully, freeing roughly 2 GB of disk space in this case.
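The two scripts called by the pipeline live in the example repository on GitLab and aren’t reproduced here. For readers who want to repeat the experiment without that repository, here is a hypothetical stand-in; the function names, the file name nonsense.bin, and the alpine base image are assumptions, not the repository’s code. It writes a build context containing a file of random bytes and builds it under a unique, timestamped tag. The random content matters: it makes every run produce a new image layer instead of a build cache hit, so Docker’s disk usage actually grows with each pipeline run.

```shell
# Hypothetical stand-ins for scripts/generate-random-nonsense.sh and
# scripts/build-random-nonsense-image.sh; the real scripts may differ.

# Create a build context in DIR with BYTES random bytes and a Dockerfile.
generate_random_nonsense() {
  local dir="$1" bytes="${2:-1000000000}"   # default: about 1 GB
  mkdir -p "$dir"
  head -c "$bytes" /dev/urandom > "$dir/nonsense.bin"
  printf 'FROM alpine\nCOPY nonsense.bin /nonsense.bin\n' > "$dir/Dockerfile"
}

# Build the context in DIR into an image tagged with the current Unix timestamp.
build_random_nonsense_image() {
  docker build -t "random-nonsense-image-$(date +%s)" "$1"
}
```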

Prerequisites and limitations of the pre-build script approach

It’s easy to see that our pre-build script approach fulfills the requirements we laid out for it, i.e. it

- runs synchronously before pipeline jobs,

- pre-emptively cleans up unused resources, and

- allows us to provide custom clean-up logic.

Nonetheless, there are still a few limitations left to consider.

First of all, bash must be available during pre-build script execution if we want to use the is_docker_disk_space_usage_above_limit.sh script, because it uses some BASHisms. Moreover, since we use the Docker executor, our jobs need an image that has the Docker CLI installed (such as the official Docker image we used in our test earlier). Building a custom image to run our pipelines with mitigates this problem, but it’s still something that has to be addressed.

Another thing to keep in mind is that Docker’s disk space usage is only an approximation of (and typically lower than) the system’s disk space usage. Consequently, we have to choose the limit that triggers our clean-up logic low enough that the machine doesn’t run out of disk space anyway.
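One way to sidestep this approximation is to additionally (or instead) look at the usage of the filesystem itself. A minimal sketch, assuming the check runs inside a job container on a runner using Docker’s overlay2 storage driver, where df for / inside the container typically reports the host filesystem backing Docker’s data directory (this should be verified on the actual runner before relying on it); the function name is hypothetical:

```shell
# Hypothetical check: succeed if the filesystem containing TARGET (default "/")
# is at least LIMIT_PERCENT percent full.
is_filesystem_usage_above_percent() {
  local limit_percent="$1" target="${2:-/}" used_percent
  # `df -P` prints a header plus one line per filesystem in a fixed format;
  # field 5 is the used capacity, e.g. "42%".
  used_percent=$(df -P "$target" | awk 'NR == 2 { sub(/%/, "", $5); print $5 }')
  [ "$used_percent" -ge "$limit_percent" ]
}
```

In the clean-up script, a condition such as is_filesystem_usage_above_percent 80 could then replace or complement the Docker-based test.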

Also, it may still be tricky to pick the right clean-up logic. For instance, if we just run docker system prune -af --volumes as in our test, we may delete images that are required by subsequent jobs in more complex pipelines. Excluding certain images from clean-up, for instance those created in the last 24 hours, may alleviate this particular problem. However, more complicated pipelines will likely need more elaborate clean-up logic.
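A minimal sketch of such a more selective clean-up, assuming that keeping everything created within a configurable time window (24 hours by default) is good enough for our pipelines; prune_unused_docker_resources_older_than is a hypothetical name. Volumes are deliberately left out, because docker volume prune does not accept the until filter.

```shell
# Hypothetical selective clean-up: remove unused containers, networks, images,
# and build cache created more than MAX_AGE ago (a Go duration such as "24h").
prune_unused_docker_resources_older_than() {
  local max_age="${1:-24h}"
  # Containers first, so images referenced only by old stopped containers
  # become unused before the image prune runs.
  docker container prune --force --filter "until=${max_age}"
  docker network prune --force --filter "until=${max_age}"
  docker image prune --all --force --filter "until=${max_age}"
  docker builder prune --force --filter "until=${max_age}"
}
```

This could be run first when the limit is exceeded, falling back to a full docker system prune -af --volumes only if usage is still above the limit afterwards.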

Lastly, even with the pre-build script approach, there are still edge cases in which our runners will run out of disk space. For instance, if the limit is too high, a runner may still run out of disk space because a job produces more data than there is free space left.

Summary

As we’ve seen, we can use GitLab CI/CD’s pre-build script hook to clean up GitLab runners in sync with job execution, pre-emptively (to avoid breaking pipelines), and with custom clean-up logic. That being said, the pre-build script approach is not perfect, because it cannot prevent all situations in which a runner runs out of disk space. Nonetheless, I think it is a more elegant way to handle clean-up of GitLab runners than maintenance downtimes or the cron job approach.

[1] For example, if we build our images and push them to our registry in separate jobs.

[2] For reference, the GitLab runner installation script is also available under Settings > CI/CD > Runners > Specific runners of a GitLab project.

[3] Full disclosure: I tried the SSH executor for simplicity’s sake but could not get it to work due to connection problems.